The system did not respond anymore because it was offline. I am still not sure what exactly happened. I assume that it was a corrupted disk.

The .txt document I use for Communities is currently at 322844 words :smileygrin: (though only about 70% of this is my own words, as I copy some snippets from responses).

Thanks for taking the time to write detailed information - there is no such thing as a post/reply being 'too long' as the more detail the better.

# VMware vCenter Converter Standalone 5.5 update

Apologies for the late reply - while I am a VMware employee (GSS-EMEA-vSAN), I don't post on here during work hours and only *sometimes* have the energy available to think about snapshot issues (as it reminds me too much of my older roles rather than my present one).

I am still not sure what caused the corruption; maybe there was a pre-existing condition with the VMware disk files that caused it. It took us quite a while to figure out what needed to be done to get the server running again, but once it was running again we had the following disk files lying around:

What caught my eye is that, despite the fact that vCenter doesn't show any more snapshots, there is still a SERVERNAME_2-000001.vmdk there, suggesting a snapshot of disk SERVERNAME_2. But that is not the case: it is the actual disk of the operating system, whereas SERVERNAME_2.vmdk is the disk of an application data partition. It is actually referenced in the VMX file:

vCenter tells me that the server needs a disk consolidation, but I'm too afraid to run it, fearing that it might actually attempt to consolidate disks SERVERNAME_2-000001 and SERVERNAME_2 together, and that this might have been the issue to begin with (when starting the VEEAM backup job). I have no idea if the names were already botched up in the beginning or if that is an outcome of the failed backup job. I've been reading a few KB articles, and some seem to suggest creating a new snapshot and then deleting it, though that seems a bit risky to me on this server, as I don't have a backup. For the same reason, I don't want to run the consolidate option in the snapshots menu.

How does vCenter / ESX actually detect that a consolidation is needed? Is it just based on the file names? If I were to rename SERVERNAME_2-000001 to SERVERNAME_3 and then update the VMX file, should that work and make the vCenter warning go away?
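To make the file-name question concrete, here is a minimal Python sketch that lists the disks a VMX file references and flags the ones whose names merely look like snapshot deltas (ending in `-NNNNNN.vmdk`). It does not show how vCenter decides that a consolidation is needed; it only helps compare names against what the VMX really points at. The function name `list_vmx_disks`, the sample VMX text and the device slots `scsi0:0` and `scsi0:1` are assumptions for illustration, not taken from the actual server.

```python
import re

# Matches VMX entries such as: scsi0:1.fileName = "SERVERNAME_2.vmdk"
DISK_LINE = re.compile(
    r'^\s*((?:scsi|sata|ide|nvme)\d+:\d+)\.fileName\s*=\s*"([^"]+\.vmdk)"\s*$',
    re.IGNORECASE,
)
# Snapshot delta descriptors are conventionally named <disk>-NNNNNN.vmdk
DELTA_NAME = re.compile(r'-\d{6}\.vmdk$', re.IGNORECASE)


def list_vmx_disks(vmx_text):
    """Return (device, file_name, looks_like_delta) for each disk entry."""
    disks = []
    for line in vmx_text.splitlines():
        match = DISK_LINE.match(line)
        if match:
            device, file_name = match.groups()
            disks.append((device, file_name, bool(DELTA_NAME.search(file_name))))
    return disks


# Hypothetical VMX excerpt; the device slots are assumed, not from the thread.
sample_vmx = '''\
scsi0:0.present = "TRUE"
scsi0:0.fileName = "SERVERNAME_2-000001.vmdk"
scsi0:1.present = "TRUE"
scsi0:1.fileName = "SERVERNAME_2.vmdk"
'''

for device, file_name, looks_like_delta in list_vmx_disks(sample_vmx):
    label = "delta-style name" if looks_like_delta else "base-style name"
    print(device, file_name, label)
```

A delta-style name on its own does not prove that a disk is a snapshot child. As far as I know, the parent link is recorded inside the disk descriptor (the `parentFileNameHint` entry), so the descriptor contents are more telling than the file name.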
That approach failed horribly. During the VEEAM backup job the legacy server stopped responding. Upon further inspection I noticed that the HA cluster, for whatever reason, had decided to do a failover to the other ESX node. After a few minutes, it switched back to the other ESX again. It kept doing that, because the disk was now corrupt and the server kept freezing during the boot procedure.

On a weekend I attempted to start a backup while the server was running, in the hope that I could do incremental backups before finally migrating the server to the new data center. I've got a 1 Gbit connection between the two data centers.
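For a rough sense of how long a full copy takes over that link, here is a back-of-the-envelope sketch in Python using the 2.5 TB server size mentioned in this thread. The helper name `transfer_hours` and the 70% efficiency figure are assumptions for illustration; real throughput depends on the backup tool, compression and the storage on both ends.

```python
def transfer_hours(size_tb, link_gbit_per_s, efficiency=1.0):
    """Estimate hours needed to copy size_tb (decimal TB) over the link."""
    size_bits = size_tb * 1e12 * 8                      # terabytes to bits
    usable_bits_per_s = link_gbit_per_s * 1e9 * efficiency
    return size_bits / usable_bits_per_s / 3600         # seconds to hours


# Ideal case: the link is fully used for the whole transfer.
print(round(transfer_hours(2.5, 1.0), 1))                  # 5.6

# Assumed 70% effective throughput (hypothetical figure).
print(round(transfer_hours(2.5, 1.0, efficiency=0.7), 1))  # 7.9
```

So even in the ideal case a full pass takes several hours, which is part of why incremental backups before the final cutover are attractive.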

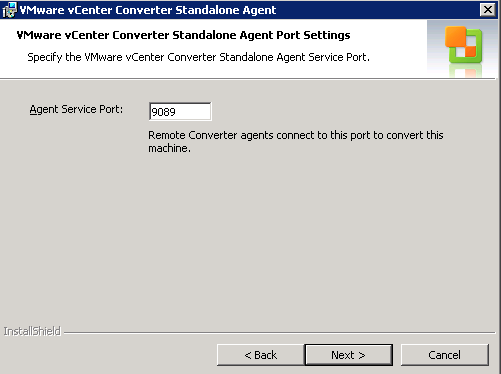

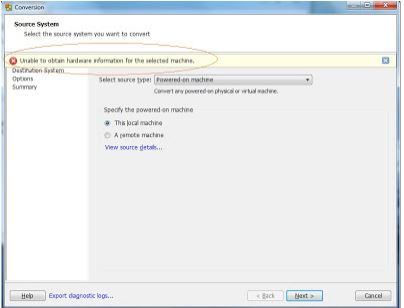

I am using VEEAM because the vCenter in the old data center is so old that I have trouble getting the Standalone Converter to both read from the old data center and copy to the new one (SSL issues, for example).

I've got a big (2.5 TB) legacy server in an old data center that I need to move to our new data center. I have almost no knowledge about the infrastructure in our old data center, and it seems that there is no working backup there (I wasn't working here when that data center was set up and nothing is documented). The old data center is running vCenter 5.5.0 with 2 ESX hosts; the new data center uses vCenter 7 with 4 ESX hosts. I planned on using VEEAM Backup to move the server from the old data center to our new one (back up from the old data center, restore in the new data center).
